
The next level in the hierarchical cascade of quality standards is occupied by "quantitative quality measurement procedures", which generate so-called "quality metrics." Metric-based expressions of translation adequacy yield formal, quantifiable numerical values: they provide lists of critical characteristics and weight them numerically in order to objectify any errors that are present. Two accessible approaches are the SAE metric for "automotive service information" (SAE J2450:2001) and the American Translators Association (ATA) framework for grading the ATA certification examination. Ongoing projects at LISA and the Localization Institute address the quantification of source text/content scope and quality, target product quality, the localization process, and standard productivity factors; their work groups categorize all of these activities as "metrics."

1. The ILR Scale. The original Interagency Language Roundtable (ILR) Language Proficiency Skill Level Descriptions provided a scale of 0 to 5 for evaluating foreign language professionals working for the federal government; NATO offers its own variant. The descriptions generally relate the skill levels of individual language professionals to specified tasks and to so-called text difficulty levels. Performance criteria are listed in considerable detail for a progression of linguistic skills in speaking, listening, reading, and writing, but these guidelines do not address any predictive relationship between ILR language skill ratings and translation performance. Indeed, the kinds of linguistic competence described by the ILR rating scale have constituted only a baseline on which actual language mediation and localization competences could be built. Thus the ILR scale has not been directly relevant to the needs of industry (or, for that matter, of government). To address this criticism, the ILR has developed provisional descriptions of translation skill levels, which start at very low levels for simple content recognition and progress to the ability to handle complex linguistic and cultural content in a variety of specialized subject fields. Within this broad range, probably only the top two levels are acceptable in the private sector. It should be noted, however, that the high volume of work in government and national security environments, coupled with the sometimes uncommon language combinations demanded there, means that some language professionals with otherwise marginal skills must be used to perform triage and to route critical documents to more competent translators.

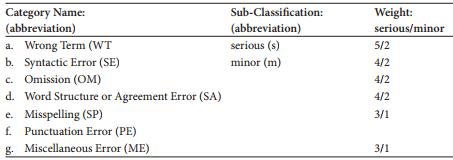

2. SAE J2450:2001. SAE J2450 is an SAE Recommended Practice whose scope is to provide a metric for evaluating the translation quality of service documentation in the automotive industry, regardless of the source or target language and regardless of how the translation is performed, i.e., by human or machine translation. The standard identifies seven error categories (see Table 1), each weighted for severity on its own scale ranging from 5 (severe) to 1 (trivial). While the SAE standard is important as a product quality metric, it does not address style, intertextuality, cultural significance, and similar factors, all of which are critical in localization. The standard can be used to evaluate translation products in an industrial setting in order to select and audit translation providers. For instance, it can be used to rate translation suppliers, cutting cost and effort by replacing 100% inspection with supposedly "reliable" random-sample editing checks.
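The weighting described above can be sketched as a small scoring function. This is a minimal Python illustration, not the standard's own procedure: it assumes each marked error is classified as serious or minor, uses the serious/minor weights commonly cited for the seven J2450 categories (verify them against the published Recommended Practice), and assumes the weighted total is normalized by the source-text word count so that texts of different lengths can be compared. The names `J2450_WEIGHTS`, `J2450Error`, and `j2450_score` are hypothetical.

```python
from dataclasses import dataclass

# ASSUMPTION: serious/minor weights as commonly cited for SAE J2450;
# check them against the published Recommended Practice before relying on them.
J2450_WEIGHTS = {
    "wrong term": {"serious": 5, "minor": 2},
    "syntactic error": {"serious": 4, "minor": 2},
    "omission": {"serious": 4, "minor": 2},
    "word structure or agreement error": {"serious": 4, "minor": 2},
    "misspelling": {"serious": 3, "minor": 1},
    "punctuation error": {"serious": 2, "minor": 1},
    "miscellaneous error": {"serious": 3, "minor": 1},
}


@dataclass(frozen=True)
class J2450Error:
    category: str  # key into J2450_WEIGHTS
    severity: str  # "serious" or "minor"


def j2450_score(errors: list[J2450Error], source_word_count: int) -> float:
    """Return the weighted error total per source word (lower is better).

    ASSUMPTION: normalization by the word count of the source text.
    """
    if source_word_count <= 0:
        raise ValueError("source_word_count must be positive")
    total = sum(J2450_WEIGHTS[e.category][e.severity] for e in errors)
    return total / source_word_count


if __name__ == "__main__":
    sample = [
        J2450Error("wrong term", "serious"),      # weight 5
        J2450Error("misspelling", "minor"),       # weight 1
        J2450Error("punctuation error", "minor"), # weight 1
    ]
    print(j2450_score(sample, 250))  # 7 / 250 = 0.028
```

Because the score is expressed per source word, the same function applies equally to a full document or to a random sample drawn for a supplier audit; whether a sample score is representative of the whole job is a separate statistical question.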

Table 1. SAE J2450: Error categories, classifications, and weights.

3. The ATA Framework for Standard Error Marking. The ATA Framework (see Table 2) was developed by the American Translators Association Certification Committee to improve consistency in the ATA certification process and to make the system more transparent (ATA 2004). The exam is designed as a summative measure of translation expertise. Nevertheless, academics are adopting and adapting ATA grading practices for use in formative assessment (see Doyle 2003, as well as Baer and Koby 2003). The ATA CIL includes 22 criteria and five weighting levels, making it a far more comprehensive evaluative tool than the SAE metric; this greater complexity reflects the fact that it is designed to assess a full range of text types and subject matters.
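A weighted error-marking scheme of this kind reduces to summing points per passage and comparing the total with a pass/fail threshold. The sketch below assumes that the five weighting levels are the doubling point values 1, 2, 4, 8, and 16 and that a passage fails once it reaches 18 points; both figures are assumptions to check against current ATA grading documentation, and the criterion labels and the names `AtaMark` and `grade_passage` are illustrative only.

```python
from typing import Iterable, NamedTuple

# ASSUMPTION: the five weighting levels expressed as point values.
ERROR_POINT_LEVELS = frozenset({1, 2, 4, 8, 16})
# ASSUMPTION: a passage fails once its error points reach this total.
FAIL_THRESHOLD = 18


class AtaMark(NamedTuple):
    criterion: str  # one of the framework's criteria (illustrative label)
    points: int     # severity weight assigned by the grader


def grade_passage(marks: Iterable[AtaMark]) -> tuple[int, bool]:
    """Sum the error points for one passage and return (total, passed)."""
    total = 0
    for mark in marks:
        if mark.points not in ERROR_POINT_LEVELS:
            raise ValueError(f"unsupported weight {mark.points} for {mark.criterion!r}")
        total += mark.points
    return total, total < FAIL_THRESHOLD


if __name__ == "__main__":
    marked = [
        AtaMark("mistranslation", 4),
        AtaMark("omission", 2),
        AtaMark("punctuation", 1),
    ]
    print(grade_passage(marked))  # (7, True)
```

With a doubling scale, one 16-point error weighs as much as sixteen 1-point errors, so the severity of errors, rather than their number, largely drives the result.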