Another feature that may be included in some TMSs is a term-extraction tool, which is sometimes referred to as a term-recognition or term-identification tool. Most term-extraction tools are monolingual, and they attempt to analyze source texts in order to identify candidate terms. However, some bilingual tools are being developed that analyze existing source texts along with their translations in an attempt to identify potential terms and their equivalents. This process can help a translator build a term base more quickly; however, although the initial extraction attempt is performed by a computer, the resulting list of candidates must be verified by a human, and therefore the process is best described as being computer-aided or semi-automatic rather than fully automatic.

Unlike the word-frequency lists, term-extraction tools attempt to identify multi-word units. There are two main approaches to term extraction: linguistic and statistical. For clarity, these approaches will be explained in separate sections; however, aspects of both approaches can be combined in a single term-extraction tool.

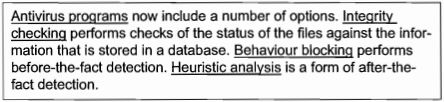

Figure 1 A short text that has been processed using a linguistic approach to term extraction.

Figure 2 A slightly modified version of the text that has been processed using a linguistic approach to term extraction.

1. Linguistic approach

Term-extraction tools that use a linguistic approach typically attempt to identify word combinations that match particular part-of-speech patterns. For example, in English, many terms consist of NOUN+NOUN or ADJECTIVE+NOUN combinations. In order to implement such an approach, each word in the text must first be tagged with its appropriate part of speech. Once the text has been correctly tagged, the term-extraction tool simply identifies all the occurrences that match the specified part-of-speech patterns. For instance, a tool that has been programmed to identify NOUN+NOUN and ADJECTIVE+NOUN combinations as potential terms would identify all lexical combinations matching those patterns from a given text, as illustrated in figure 1. Unfortunately, not all texts can be processed this neatly. If the text is modified slightly, as illustrated in figure 2, problems such as "noise" and "silence" become apparent.
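The pattern-matching step described above can be sketched in a few lines of Python. This is only a minimal illustration, not the method used by any particular tool: the sample sentence, the hand-assigned tags, and the names `TAGGED_TEXT`, `PATTERNS`, and `extract_candidates` are all assumptions made for the example, and a real tool would obtain the tags from a part-of-speech tagger rather than from a hard-coded list.

```python
from typing import List, Sequence, Tuple

TaggedToken = Tuple[str, str]  # (word, part-of-speech tag)

# Hypothetical sentence with hand-assigned tags (an assumption for this
# sketch); a real tool would run a part-of-speech tagger first.
TAGGED_TEXT: List[TaggedToken] = [
    ("Integrity", "NOUN"), ("checking", "NOUN"), ("uses", "VERB"),
    ("heuristic", "ADJ"), ("analysis", "NOUN"), ("and", "CONJ"),
    ("periodic", "ADJ"), ("checks", "NOUN"), (".", "PUNCT"),
]

# The two patterns mentioned in the text.
PATTERNS: List[Tuple[str, ...]] = [("NOUN", "NOUN"), ("ADJ", "NOUN")]


def extract_candidates(
    tagged: Sequence[TaggedToken],
    patterns: Sequence[Tuple[str, ...]],
) -> List[str]:
    """Return every word sequence whose tags match one of the patterns."""
    tags = [tag for _, tag in tagged]
    candidates = []
    for start in range(len(tagged)):
        for pattern in patterns:
            end = start + len(pattern)
            # Compare the tag window at this position with the pattern.
            if tuple(tags[start:end]) == tuple(pattern):
                words = [word for word, _ in tagged[start:end]]
                candidates.append(" ".join(words))
    return candidates


candidates = extract_candidates(TAGGED_TEXT, PATTERNS)
print(candidates)
```

Note that the matcher returns "periodic checks" alongside "Integrity checking" and "heuristic analysis": it checks only the tag sequence, so it cannot tell a term from an ordinary phrase that happens to follow the same pattern.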

First, not all of the combinations that follow the specified patterns will qualify as terms. Of the NOUN+NOUN and ADJECTIVE+NOUN candidates that were identified in figure 2, some qualify as terms ("antivirus programs," "integrity checking," "behaviour blocking," "heuristic analysis"), whereas others do not ("more options," "periodic checks," "current status," "stored information"). The latter set constitutes noise and would need to be eliminated from the list of candidates by a human.

Another potential problem is that some legitimate terms may be formed according to patterns that have not been pre-programmed into the tool. This can result in "silence," a situation in which relevant information is not retrieved. For example, the terms "before-the-fact detection" and "after-the-fact detection" have been formed using the pattern PREPOSITION+ARTICLE+NOUN+NOUN; however, this pattern is not common and is not likely to be recognized by many term-extraction tools.
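Silence can be illustrated with a small Python sketch. Everything here is an assumption made for the example: the function name `match_patterns`, the tag labels, and in particular the decision to treat the hyphenated term as four separate tokens, which depends on how a given tool tokenizes text.

```python
from typing import List, Sequence, Tuple

TaggedToken = Tuple[str, str]  # (word, part-of-speech tag)


def match_patterns(
    tagged: Sequence[TaggedToken],
    patterns: Sequence[Tuple[str, ...]],
) -> List[str]:
    """Return every word sequence whose tags match one of the patterns."""
    tags = [tag for _, tag in tagged]
    found = []
    for start in range(len(tagged)):
        for pattern in patterns:
            end = start + len(pattern)
            if tuple(tags[start:end]) == tuple(pattern):
                found.append(" ".join(w for w, _ in tagged[start:end]))
    return found


# "before-the-fact detection", split on hyphens (an assumed tokenization)
# and tagged by hand.
TERM: List[TaggedToken] = [
    ("before", "PREP"), ("the", "ART"), ("fact", "NOUN"), ("detection", "NOUN"),
]

# Patterns a tool might ship with, per the text.
DEFAULT_PATTERNS = [("NOUN", "NOUN"), ("ADJ", "NOUN")]
# The same set extended with the less common four-part pattern.
EXTENDED_PATTERNS = DEFAULT_PATTERNS + [("PREP", "ART", "NOUN", "NOUN")]

found_default = match_patterns(TERM, DEFAULT_PATTERNS)
found_extended = match_patterns(TERM, EXTENDED_PATTERNS)

print(found_default)
print(found_extended)
```

Under these assumptions, the default patterns never return the full term (silence) and pick up only the fragment "fact detection"; the full term appears only once the extra pattern has been added to the tool.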
